One thing is now obvious: Kubernetes has changed the IT landscape for the better. For the first time in a long time, we have a universal API for the configuration of IT infrastructure, applications, and their components. An IT “babel fish”, if you will! (Sorry, 45-year-old CEO here, and The Hitchhiker's Guide to the Galaxy is one of my all-time favs.)

If you think back just 5 years, how did you deploy and manage your server and application estate?

You really only had two viable options: either manually installing and configuring every component or, for the fortunate, investing in automation through Infrastructure as Code. Manual configuration was far and away the most common mechanism, as only the most cash-flush IT teams could afford to invest the time and tooling to deliver automation.

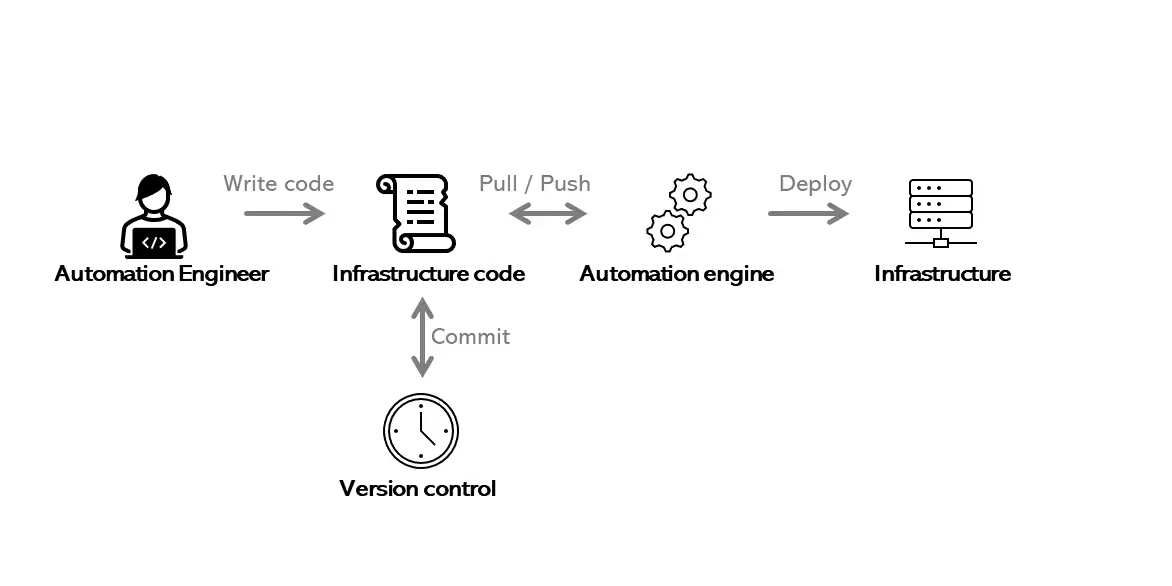

But, for those that did embrace automation, how were those first incarnations of Infrastructure as Code delivered?

At the time, we had dozens of proprietary vendor API integrations we needed to maintain: the server hardware layer (mainly blade chassis), the virtualisation layer, the storage subsystem, the network switches, the firewalls, and the load balancers. If you were lucky enough to have a single vendor for each element, then you likely had maybe 10 discrete APIs, but if you were multi-vendor, then wow! We also needed to maintain Bash/PowerShell scripts for the configuration of the base operating system for each type of server provisioned, and we needed to build silent install scripts to get apps installed and configured (remember MST files??). It was a mess. If you can remember tools like SaltStack, Ansible, Puppet/Chef, CFEngine, and VMware Configuration Manager, then you will likely know what I'm talking about. For anyone who wanted to create and maintain these scripts, it was a substantial long-term investment. The only organizations that invested in this automation were those that had highly dynamic server and application landscapes that warranted the time savings from automation, or those that wanted the comfort of being able to rebuild their environments on demand.


Fast forward to now. With Kubernetes’ single, consistent API format, the awesomeness of Kubernetes Operators and CRDs, a fair bit of YAML, and a decent helping of know-how, it's relatively straightforward to have your entire landscape declared, deployed, maintained, and supported by Kubernetes monitoring via code. And with your landscape defined as code, you are able to deploy and maintain it with predictable repeatability every time. In fact, most organisations that adopt Kubernetes consider it almost mandatory that a switch to “everything as code” goes part and parcel with the transition.

But, just like the old saying popularly linked to Newton, “what goes up, must come down”, for every benefit there is a contrasting risk, and in the case of “everything as code”, it comes down to two things: 1) the risk of errors making their way into Prod, and 2) the skills required to automate.

- If you automate something, then your automation had better be perfect. Unlike humans, code is not able to dynamically adapt to unexpected changes. Code expects to do the same thing every time and get the same result every time. Automation needs error handlers before it can be relied upon, and these error handlers need to be thorough. With automation, it is incredibly easy to inadvertently apply a defective configuration and take an entire system offline (here’s looking at you, Cloudflare, oh and most of the other Cloud Native enterprises that have had outages due to automation gone awry). So whenever automation is deployed, you would be negligent not to invest in development and pre-prod environments in which to test the automation. Also, if you have a fully automated deployment, then you need to ensure that you have an equally significant investment in writing health checks, which are used to automatically roll back any changes if there are unintended consequences.

- Whilst Kubernetes has made “everything as code” possible, you cannot just take any Windows or Linux admin and turn them into an automation expert overnight. It takes time to learn YAML constructs, and it requires a certain ‘finesse’ to write a YAML file from a standing start. And in order to automate Kubernetes, you need to know how the API works, what each API call does, and what the valid inputs (and expected outputs) are for each. You also need to monitor Kubernetes API deprecation notices and ensure your automation continues to function correctly across releases. This all translates into the need for your automation (DevOps/Platforms) team to be Kubernetes experts; nothing less will provide the required quality (at least, not initially).
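
To make the first point a little more concrete, here is a minimal sketch of the “apply, health check, roll back” pattern in Python. It is only an illustration under assumptions: `apply_with_rollback`, the `apply` and `healthy` callables, and the image names are hypothetical stand-ins for whatever your deployment tooling and probes actually are, not any particular product's API.

```python
from typing import Callable, Dict

Config = Dict[str, str]


def apply_with_rollback(
    current: Config,
    candidate: Config,
    apply: Callable[[Config], None],
    healthy: Callable[[], bool],
    checks: int = 3,
) -> Config:
    """Apply `candidate`; if any health check fails, re-apply `current`.

    Returns whichever configuration is live when the function exits.
    """
    apply(candidate)
    for _ in range(checks):
        if not healthy():
            # Unintended consequence detected: restore the last known-good state.
            apply(current)
            return current
    return candidate


# Hypothetical in-memory "cluster" so the sketch runs without any real system.
live: Config = {}


def apply_to_live(cfg: Config) -> None:
    live.clear()
    live.update(cfg)


good = {"image": "web:1.0"}
bad = {"image": "web:2.0-broken"}
apply_to_live(good)

# Assumed probe: only the 1.0 image counts as healthy in this toy example.
result = apply_with_rollback(good, bad, apply_to_live, lambda: live["image"] == "web:1.0")
print(result)  # the known-good config, because the probe failed
```

A real pipeline would space the checks out over time and probe real endpoints, but the shape is the same: the automation is only as trustworthy as the `healthy` function you hand it.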

So, how can you unlock the benefits of Kubernetes without having to dive headfirst into code?

Easy. With Templates, commonly implemented via Helm charts or the more “in vogue” Kustomize files.
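
For anyone who has not used either tool, the core idea can be sketched in a few lines of Python: an expert maintains a base definition, and everyone else supplies only a small set of values that get merged over it. This is a conceptual sketch only. It is not how Helm or Kustomize are implemented, and `deep_merge`, `BASE`, and `PROD_VALUES` are names invented for the illustration.

```python
import copy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `base` with `overrides` merged in, recursing into nested dicts."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            # Scalars and lists are replaced wholesale in this simplified sketch.
            merged[key] = copy.deepcopy(value)
    return merged


# The expert-maintained template: a trimmed, Deployment-like definition.
BASE = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {"replicas": 1},
}

# Per-environment values: the only thing a consumer of the template touches.
PROD_VALUES = {"spec": {"replicas": 3}, "metadata": {"labels": {"env": "prod"}}}

rendered = deep_merge(BASE, PROD_VALUES)
print(rendered["spec"]["replicas"])  # 3
```

The base stays untouched, so the same template can be rendered for dev, test, and prod with different values.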

But to take it a step even further, how do you enable the non-container-native developer or engineer to use these? If there is one thing that Apple and Google can claim fame for, it's their App Stores, and the simplicity of deploying any application with just a single action. This consumerization of application deployments is what Kubernetes needs: a “click to deploy” marketplace that does not require any knowledge of adjusting Helm values.yaml files or manipulating Kustomize overlays.

There are a number of vendors trying to deliver this marketplace capability under the guise of an “Internal Developer Platform”. Each vendor brings its own unique solution to this requirement, and at Portainer, we do this using our “Templates” function.

Portainer Templates allow an internal expert to completely define the state of an application, and then to publish that configuration state to other internal users to simply “Click to Deploy”. The users deploying their applications from the template do not need to customize anything. This is the ultimate in simplicity and usability.

OK, so that’s well and good, but what about those organizations that want to get on the “everything as code” bandwagon? Does Portainer stop them from doing this? Is Portainer just a click-ops UI? Not at all. Portainer has a built-in GitOps engine, and that engine can be configured to source all application definitions from Git, and then to deploy applications based on these definitions. Deploying in this way removes the UI from the equation, ensuring that applications can be redeployed at any time, and they will always be deployed in exactly the same way.
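
If GitOps is new to you, the underlying idea is a comparison between what Git says should exist and what is actually running. The Python sketch below shows that comparison in its simplest form. It is a generic illustration, not Portainer's engine: `plan_sync`, `Plan`, and the application names are hypothetical, and whether a given tool deletes things that are missing from Git is a policy choice that varies.

```python
from typing import Dict, List, NamedTuple

State = Dict[str, dict]  # application name -> its definition


class Plan(NamedTuple):
    create: List[str]
    update: List[str]
    delete: List[str]


def plan_sync(desired: State, actual: State) -> Plan:
    """Compare the definitions in Git (`desired`) with what is running (`actual`)."""
    create = sorted(name for name in desired if name not in actual)
    update = sorted(
        name for name in desired if name in actual and desired[name] != actual[name]
    )
    delete = sorted(name for name in actual if name not in desired)
    return Plan(create, update, delete)


desired = {"web": {"image": "web:1.1"}, "api": {"image": "api:2.0"}}
actual = {"web": {"image": "web:1.0"}, "legacy": {"image": "old:0.9"}}
print(plan_sync(desired, actual))
# Plan(create=['api'], update=['web'], delete=['legacy'])
```

Once the running state matches Git, the same call returns an empty plan, which is what makes a redeploy from Git repeatable.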

So, regardless of where you are on your internal journey, Portainer can help you to standardize deployments, either using Templates, or through our native GitOps engine, and with the magic of Kubernetes, the breadth of scope for configuration is vast.

Here at Portainer, we love what Kubernetes enables. We just think that it should be easier to experience the benefits of Kubernetes, and that any IT team, of any size or maturity, should be able to fully exploit its power.

Neil