KubeSolo was specifically designed to tackle the unique challenges faced at the device edge, the furthest reaches of your network. When we say "device edge," we’re usually referring to environments typical in IoT and Industrial IoT device deployments. These are scenarios where hardware resources are minimal, networks might be intermittent or unreliable, and your devices must function reliably in isolation.

So, what exactly is KubeSolo?

KubeSolo is an installable, single-binary Kubernetes distribution customized specifically to run efficiently on highly resource-constrained edge devices.

This FAQ aims to clarify common queries about KubeSolo, highlight how it differs from other lightweight Kubernetes distributions, and help you decide when it's your best choice.

1. What is KubeSolo actually designed for?

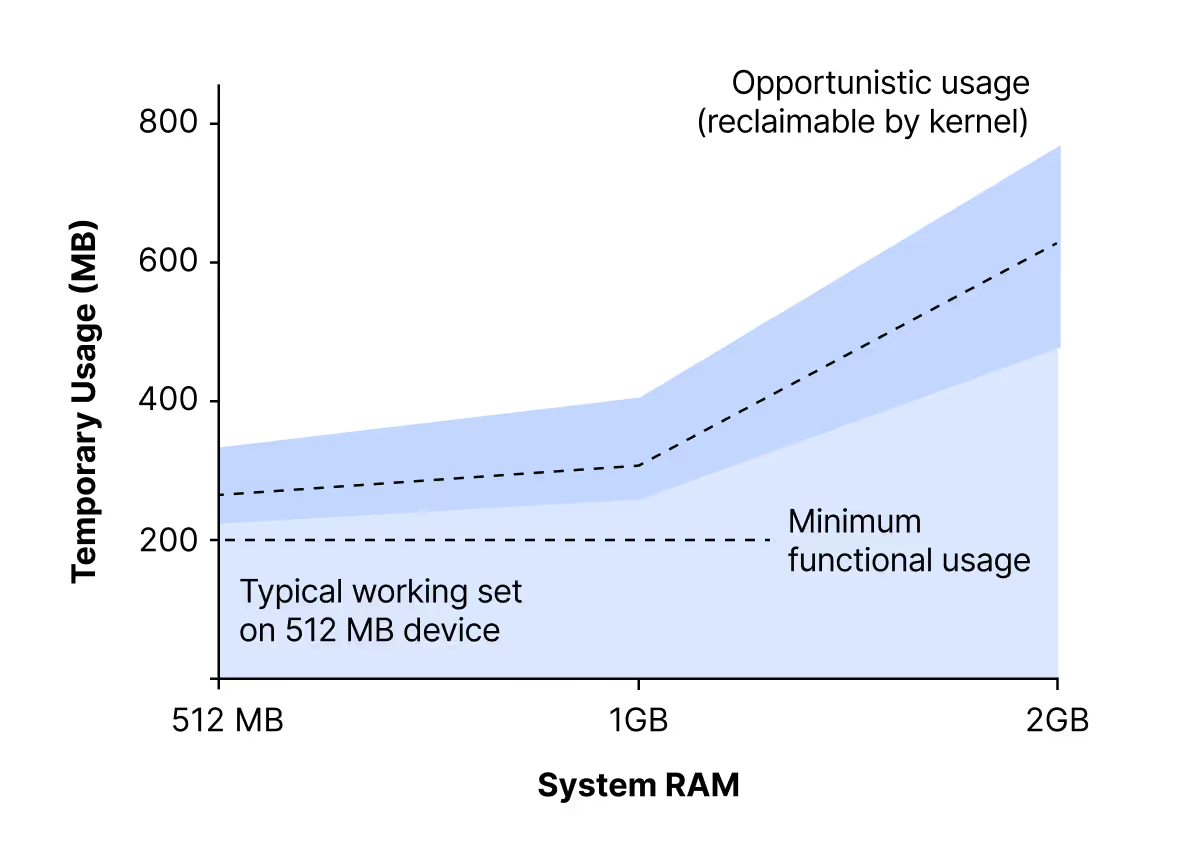

KubeSolo was built explicitly for extremely resource-constrained environments, such as devices with less than 1GB of RAM, basic CPUs, and simple SD card storage. If your hardware consists of just 512MB of RAM and a single-core ARM processor, then KubeSolo was created specifically with your needs in mind. We've optimized it carefully to run comfortably within these tight resource constraints, typically requiring only around 200MB of RAM during normal operation.

2. If my devices have 1GB or more RAM, should I still choose KubeSolo?

Probably not. If your devices have 1GB or more RAM, then standard lightweight Kubernetes distributions such as K3s, K0s, or MicroK8s are generally a better fit. These distributions are CNCF-certified, widely adopted, and more suitable for general-purpose use. However, keep in mind that K3s and K0s usually require about 400MB of RAM to operate comfortably, whereas MicroK8s typically needs around 2GB to remain stable.

3. Is KubeSolo CNCF-certified, and will it ever be?

Currently, KubeSolo is not CNCF-certified. The customizations we've made to reduce its memory footprint to just 200MB mean it doesn't fully meet CNCF’s certification criteria today. However, we plan on actively engaging with CNCF and intend to campaign for recognition of these necessary optimizations.

4. Why should I use KubeSolo or Kubernetes rather than Docker or Podman?

That's a great question! Docker and Podman remain the lightest ways to run containers, ideal if your top priority is absolute minimal resource use. However, Kubernetes has become a universal standard across the software industry, particularly in IoT, Industrial IoT, and Industry 4.0. Many off-the-shelf industrial software packages explicitly require Kubernetes. KubeSolo represents an ideal compromise, allowing you to leverage Kubernetes even within the tightest constraints.

5. Why does KubeSolo sometimes appear to use more than 200MB of RAM in Linux monitoring tools?

If you're monitoring memory usage, you may notice that KubeSolo appears to use more RAM, especially on devices with more than 1 GB of available memory. This higher usage is due to Linux assigning available memory for caching purposes to optimize performance. When your device encounters memory contention, KubeSolo automatically releases this cached memory back to the host, comfortably operating within its actual target of around 200MB.

6. Does KubeSolo support multi-node or multi-cluster configurations?

No, KubeSolo is specifically optimized for single-node deployments. To centrally manage many standalone KubeSolo instances (for example, hundreds of edge devices), you’ll need a multi-cluster management solution like Portainer or CNCF’s Open Cluster Management Project.

Portainer, for instance, gives you centralized visibility, configuration, and control over each KubeSolo instance through a single user interface.

Alternatively, you could also manage these devices through custom scripting or centrally deployed GitOps tools, but Portainer significantly simplifies this by removing manual effort and providing clear oversight.

7. How does KubeSolo differ from KubeEdge?

KubeEdge employs a distinctly different architecture. It requires edge devices to act as worker nodes within a centrally managed, network-connected Kubernetes cluster. While suitable for some scenarios, this approach isn't optimal in environments with unreliable or intermittent network connectivity. KubeSolo, by contrast, provides fully autonomous, self-contained single-node Kubernetes clusters, designed explicitly for offline or standalone edge deployments.

8. How do I manage KubeSolo?

KubeSolo is standard Kubernetes at its core. Therefore, you can manage it using any Kubernetes client-side management tool of your choice, such as VSCode, OpenLens, Headlamp, K9s, or Portainer. You can even use ArgoCD if you prefer (remotely connecting to KubeSolo using the Kubernetes API). This flexibility lets you integrate seamlessly into your existing Kubernetes management workflows.

9. What hardware is KubeSolo designed to run on?

KubeSolo is specifically designed for embedded compute hardware commonly found in industrial IoT deployments, such as the Wago CC100 series or Bosch Embedded Compute devices. Similar small-form-factor hardware from other PLC vendors, like Siemens (SIMATIC IOT2000), Phoenix Contact (PLCnext Technology AXC F 1152), Beckhoff (CX7000 Embedded PC), or Advantech’s compact edge computing series, would also be excellent fits, provided they allow Linux and Docker workloads on their smallest, most resource-constrained devices.

We hope this helps clarify exactly what KubeSolo is, how it differs from other solutions, and when it's the best choice for your device edge projects. If you have more questions, we’re here to help you navigate your Kubernetes journey at the extreme edge!