Recently I was in discussions with a client who wants to use Kubernetes on their factory floor IIoT devices (Atom CPU, 1GB RAM), and they asked which lightweight Kubernetes distribution Portainer officially recommends. I mentioned that we don't have any formal recommendations and that all three (k0s, k3s, and MicroK8s) are relatively similar, and all claim to be optimized for low-resource deployments. I recommended the client try all three themselves.

Well, the client came back to me, complaining that they couldn't get any to function reliably and that I should try them myself. So I did, and the results surprised me!

Let me show you my results ...

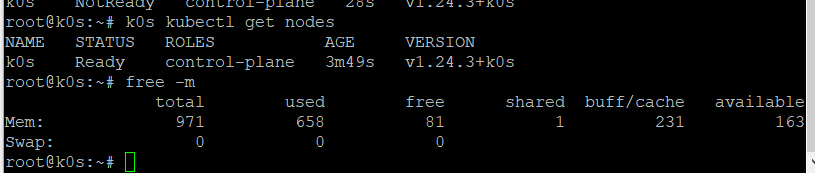

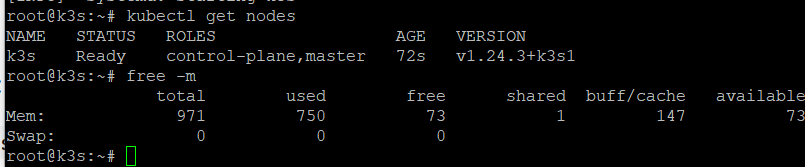

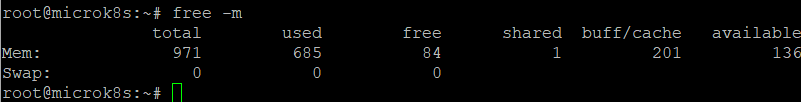

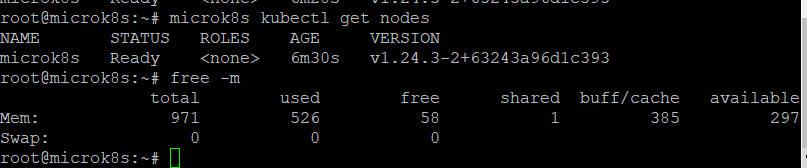

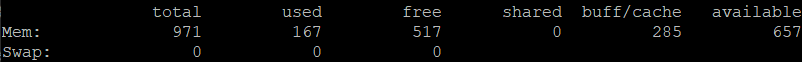

I deployed 3x VMs, each with 1 CPU and 1 GB RAM. This is to represent the constrained node that would often be seen in Edge Compute environments. On each of these, I deployed Kubernetes using the default settings. I then ran a simple "free -m" command on each to see how much RAM was being used to "idle" the cluster.

Note: ignore the "free" value, as it's misleading. The memory actually available is a combination of free and reclaimable buffer cache.
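To make the note above concrete, here is a minimal Python sketch that parses `free -m` output and adds the "free" and "buff/cache" columns together. The sample output is illustrative only (it is not one of my measurements, apart from the 971MB total), and the function names are my own. It assumes the column layout printed by recent versions of `free`.

```python
def parse_free_m(output: str) -> dict:
    """Parse the 'Mem:' row of `free -m` output into a dict keyed by column name."""
    lines = [line for line in output.strip().splitlines() if line.strip()]
    headers = lines[0].split()
    mem_row = next(line for line in lines if line.lstrip().startswith("Mem:"))
    values = [int(v) for v in mem_row.split()[1:]]
    return dict(zip(headers, values))


def effective_free_mb(mem: dict) -> int:
    """Free memory plus buffer/cache: an upper bound on what could be reclaimed."""
    return mem["free"] + mem["buff/cache"]


# Illustrative sample only; the numbers below are made up for the example.
SAMPLE = """\
              total        used        free      shared  buff/cache   available
Mem:            971         600          71           1         300         250
Swap:             0           0           0
"""

if __name__ == "__main__":
    mem = parse_free_m(SAMPLE)
    print("used MB:", mem["used"])
    print("free + buff/cache MB:", effective_free_mb(mem))
```

Not all of the buffer cache can necessarily be reclaimed, so treat the sum as a ceiling. Where `free` prints an "available" column, that is the kernel's own, more conservative estimate.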

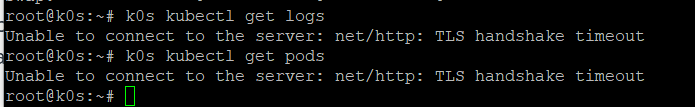

K0s

K3s

MicroK8s

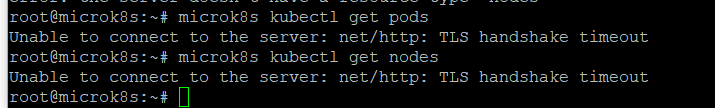

Whilst MicroK8s installed, I was unable to run ANY MicroK8s commands.

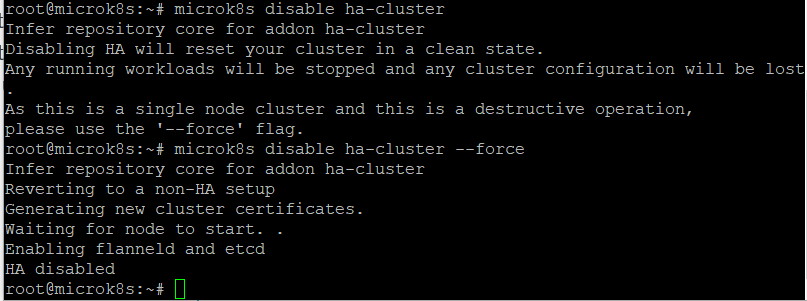

I contacted Canonical to ask their advice about the above, and was told that, by default, MicroK8s installs the Calico network driver and uses dqlite rather than etcd, a setup that is not needed for single-node deployments. They recommended I disable HA (which switches from Calico to Flannel and from dqlite to etcd) using the command "microk8s disable ha-cluster" and then retry my tests. So I did this.

The resulting memory usage dropped by 159MB:

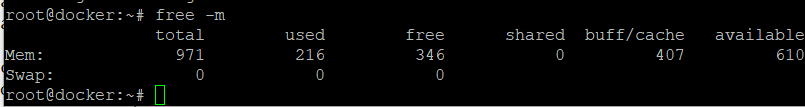

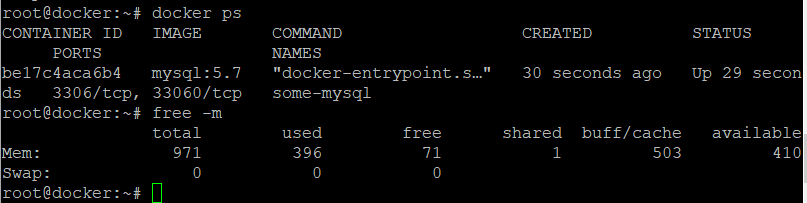

Out of interest, I wanted to see what a standard Docker deployment would use on the same VM spec. It only uses 216MB of RAM.

Base OS

OK, but this memory usage includes the base OS... and that is correct. It's why the base OS should be running as few services as possible. My deployment was based on Ubuntu Minimal, and the VM with nothing running on it at all uses 167MB. Again though, this is a real-life scenario.

OK, so now we have our Kubernetes Single-Node Clusters up.

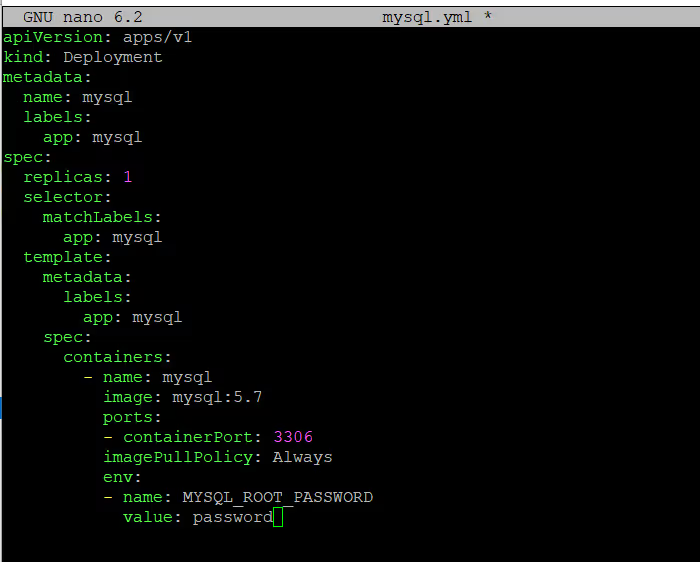

Let's try and deploy a simple MySQL instance.

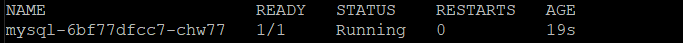

I see the pod has started on all 3 platforms,

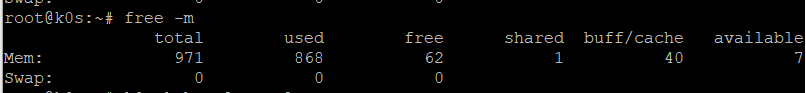

so how about used memory now ... up to 868MB of 971MB total.

Unfortunately, once the pod started, the K0s, K3s, and MicroK8s API servers stopped responding to further API commands, so I was unable to issue any kubectl commands from this point forward. This effectively rendered the environments unusable.
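If you want to watch for this kind of hang in your own tests, a small probe along these lines may help. This is a rough sketch, not what I ran: the function name, the timeout values, and the injectable `run` parameter are my own choices. It asks the API server's `/readyz` endpoint through kubectl and treats a timeout or a non-zero exit as "not responding".

```python
import subprocess


def api_server_responds(kubectl=("kubectl",), timeout_s=10, run=subprocess.run):
    """Return True if the API server answers /readyz within the time limit.

    `kubectl` is the command prefix, so a distribution wrapper such as
    ("microk8s", "kubectl") can be passed instead of plain kubectl.
    `run` is injectable so the function can be exercised without a cluster.
    """
    cmd = [*kubectl, "get", "--raw=/readyz", f"--request-timeout={timeout_s}s"]
    try:
        # Hard process timeout slightly above kubectl's own request timeout.
        result = run(cmd, capture_output=True, text=True, timeout=timeout_s + 5)
    except (subprocess.TimeoutExpired, FileNotFoundError):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("API server responding:", api_server_responds())
```

Running it in a loop before and after deploying a workload would show the point at which the API server stops answering.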

I wanted to see the same thing on the Docker node, so I deployed MySQL using 'docker run mysql' and saw the used memory was only 396MB.

My Summary

None of the lightweight Kubernetes distributions (or at least, their out-of-the-box deployments) seem to be suitable for devices that have 1GB of RAM or less. Not if you want to actually deploy any applications on them, that's for sure. I would recommend that lightweight hardware continues to use Docker standalone if containers are needed.

Quick Table to summarise.

| Distribution | Used |
| --- | --- |
| Base OS, no Kubernetes | 167MB |
| K0s | 658MB |
| K3s | 750MB |
| MicroK8s | 685MB |
| MicroK8s (no HA) | 526MB |
| Docker | 216MB |
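To put those numbers in perspective, the short Python sketch below subtracts the base OS figure from each result and works out how much RAM is left for applications. It assumes every VM reports the same 971MB total that I saw after deploying MySQL; the variable and function names are my own.

```python
TOTAL_MB = 971    # total RAM reported on the 1GB VM (assumed identical on every VM)
BASE_OS_MB = 167  # Ubuntu Minimal with nothing else running

# Idle "used" memory from the summary table, in MB.
USED_MB = {
    "K0s": 658,
    "K3s": 750,
    "MicroK8s": 685,
    "MicroK8s (no HA)": 526,
    "Docker": 216,
}


def overhead_and_headroom(used_mb, base_mb=BASE_OS_MB, total_mb=TOTAL_MB):
    """Map each platform to (MB used on top of the base OS, MB left for workloads)."""
    return {
        name: (used - base_mb, total_mb - used)
        for name, used in used_mb.items()
    }


if __name__ == "__main__":
    for name, (overhead, headroom) in overhead_and_headroom(USED_MB).items():
        print(f"{name:18} overhead {overhead:4d}MB   headroom {headroom:4d}MB")
```

On these figures, K3s adds 583MB on top of the base OS and leaves 221MB of headroom, while Docker adds 49MB and leaves 755MB.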

One other thing to note: the Kubernetes API server generates a pretty decent amount of disk I/O, so if you run on CF cards, expect them to wear out in a relatively short space of time, and also expect to see a lot of API timeouts occurring (leading to Kubernetes instability). At a minimum, you need to run on SSD storage (not even USB stick storage is fast enough).

Now, as an added bonus...

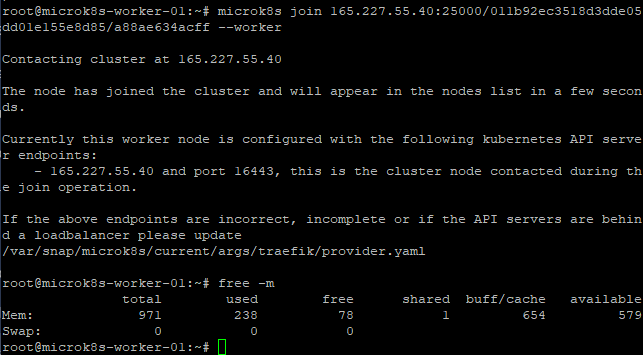

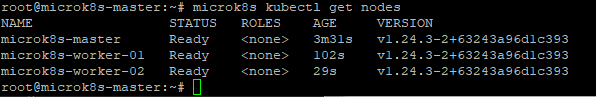

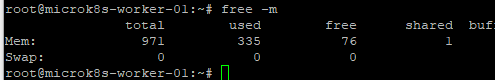

Out of pure interest, I deployed 2x more MicroK8s nodes and configured them as workers, joining them to a standard MicroK8s cluster node (not the non-HA node), as I wanted to see what memory usage would be for a pure worker node.

Interestingly, the memory usage is substantially lower, at only 238MB. This is impressive, given the cluster is running Calico networking.

Note though, once I enabled dns, RBAC, hostpath-storage, and metrics-server on the cluster (all of which are needed in production), memory usage increased to 335MB.

So, if you absolutely have to use Kubernetes at the edge, on lightweight hardware, you "can", as long as you have a node with at least 2GB of RAM that will operate exclusively as the master node. Clearly this adds a single point of failure, but Kubernetes can be tuned to tolerate an extended outage of the master node without taking the cluster and all workloads offline.