“The cloud will take over every datacenter.” That was the mantra that many engineers were hearing in 2015-2016. Long gone were the days of on-prem workloads. Everything was going to live in the cloud.

Fast forward to now (2023), and that reality never arrived.

Sure, there are a lot of workloads in the cloud and it’s a billion-dollar business. The thing is, there are still a lot of workloads on-prem.

However, on-prem workloads, especially for Kubernetes, don’t come without complications.

In this blog post, you’ll learn a bit about the complications of self-hosted Kubernetes, the upsides that come with it, and why you may want to steer towards the cloud-hosted direction.

On-Prem vs Managed Kubernetes Service

Have you ever seen Kelsey Hightower's Kubernetes-The-Hard-Way tutorial?

If not, you should check it out just from an educational perspective as it’s quite interesting. Even if you don’t go through it, you can read it from a theoretical perspective to see what’s truly happening underneath the hood when it comes to Kubernetes, from provisioning a Certificate Authority (CA) to bootstrapping Etcd manually. As you read or go through it, you begin to see, understand, and appreciate the complexity of Kubernetes. It’s sort of a beautiful thing.

However, in today’s world, engineers don’t really want to go through all of that.

That’s especially true when they have KaaS (Kubernetes-as-a-Service) or Managed Kubernetes Service offerings (same thing, just different names depending on who you ask). There’s even a middle approach: bootstrapping with Kubeadm, which is “raw Kubernetes” deployed in an automated fashion on Virtual Machines.

Let’s break down five key components for thinking about on-prem vs a managed offering.

- Cost.

- Complexity.

- Autoscaling bare metal vs cloud.

- Third-party solutions vs built-in.

- Hybrid deployments (Azure Arc, Azure Stack HCI, EKS Anywhere, etc.)

Cost

When thinking about cost, there are a few ways to look at it.

From an on-prem perspective, the biggest costs are:

- Running the workloads in a data center.

- Licensing.

- Hiring dedicated engineers that know how to run a data center.

From a managed service perspective, the biggest costs are:

- Subscriptions to the cloud.

- Paying per hour, or at a reserved rate, for resources.

- Hiring dedicated engineers that know how to run the environment.

You’ll notice that “hiring dedicated engineers” comes up in both lists, and for good reason. Regardless of where you’re running workloads, you’ll have to hire professionals to run the environment. The biggest difference is “how” they’re running the environment. If you’re in the cloud, you don’t need an engineer that knows how to rack and stack servers. You need an engineer that knows how to manage cloud services with software-defined infrastructure.

In short, the biggest difference in the cloud vs on-prem from a cost perspective is paying for hardware/hosting vs paying a subscription.

Complexity

There’s a whole section on the complexity of running on-prem vs a managed offering coming up, but before we get to that, keep one thing in mind. From a complexity perspective, the biggest piece is having engineers that understand the underlying components of Kubernetes inside the cluster vs engineers that know “of them”, but haven’t been studying them for years.

Autoscaling

When scaling out Kubernetes Nodes, it’s of course going to be easier in the cloud.

Why? Because you have “unlimited resources” (as long as you keep paying for them). Need a new VM that’s running a worker node in AKS or EKS? No problem. Just turn on the “auto-scaling” feature.

On-prem, you have to ensure that the hardware, VMs, and resources (CPU, RAM, storage, etc.) are readily available before you start scaling out. The best way to do this is with a hot/cold method, as in, having dedicated Worker Nodes “turned off”, but ready to accept traffic if needed. However, the downside is that you have hardware sitting around doing nothing.
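To make the hot/cold idea concrete, here is a minimal Python sketch that estimates how many standby Worker Nodes would need to exist ahead of a traffic peak. The function name and the example numbers are hypothetical, and it assumes every node can hold the same number of Pods, which real clusters rarely guarantee.

```python
import math


def standby_nodes_needed(peak_pods: int, current_pods: int, pods_per_node: int) -> int:
    """Estimate how many extra Worker Nodes must already be racked to absorb a peak.

    Assumes (hypothetically) that every node fits exactly `pods_per_node` Pods.
    """
    if pods_per_node <= 0:
        raise ValueError("pods_per_node must be positive")
    # Nodes required for the current load and for the expected peak.
    nodes_now = math.ceil(current_pods / pods_per_node)
    nodes_at_peak = math.ceil(peak_pods / pods_per_node)
    # Never return a negative number if the peak is below the current load.
    return max(0, nodes_at_peak - nodes_now)


if __name__ == "__main__":
    print(standby_nodes_needed(peak_pods=300, current_pods=120, pods_per_node=30))
```

With these example values the result is 6: ten nodes at peak minus the four already running. On-prem, those six nodes are hardware you own whether or not the peak ever comes.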

Third-Party Solutions

Kubernetes is a house that builders just put up. It has the architecture built to make things work, but there are a lot of pieces that aren’t out of the box. Authentication isn’t available out of the box. Secure communication between services isn’t out of the box. Policy management isn’t out of the box. GitOps isn’t out of the box.

With on-prem solutions, you need to set up and configure a lot of third-party tooling to make this happen. For example, if you want GitOps, you have to manually deploy Controllers and various permissions/resources to make it work properly. If you’re using Portainer, you don’t have to because it’s all built-in regardless of if your Kubernetes clusters are running on-prem or not.

In the cloud, a lot of third-party solutions are available. For example, in Azure Kubernetes Service, there are Azure Active Directory, GitOps, and Service Mesh solutions out of the box. Because of that, utilizing these third-party solutions in the cloud simply makes things easier.

Hybrid

If you’re still thinking to yourself “yes, but I have on-prem needs”, that’s totally fine! A lot of organizations run Kubernetes on-prem. Mercedes (car company) runs 1,000+ Kubernetes clusters on-prem. However, they have a team to support it. Quite frankly, a lot of organizations don’t have the proper team to do that.

If you don’t have a team that can support it all, but still want to utilize on-prem Kubernetes clusters, you can think about hybrid solutions.

A few are:

- EKS Anywhere

- Azure Stack HCI

- Azure Arc

- Google Anthos

What the above solutions allow you to do is have on-prem workloads, but manage them in the cloud. That way, you get the benefits of the cloud without being in the cloud.

However, there’s a huge piece of the puzzle to point out here: having on-prem workloads still requires engineers that know how to configure them. Managing the on-prem workloads in the cloud makes things easier, but not “as easy” as running Managed Kubernetes Services.

What Managed Kubernetes Service Offerings Give You

When you run Kubernetes on-prem, there are several components that just “aren’t there” by default.

A great example of this is load balancers.

Let’s say you want to run load balancers, which you most likely will in Kubernetes. If you’re on-prem, there’s no resource out of the box that provides load balancer functionality. You’d have to use something like MetalLB. In the cloud, it’s different. If you use a LoadBalancer type for a Kubernetes Service, a cloud load balancer is automatically deployed for you.
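As a rough illustration of the cloud side, the Python sketch below builds a Service manifest of type LoadBalancer and prints it as JSON, which `kubectl apply -f -` accepts. The Service name, label, and ports are placeholders, not values from any real deployment.

```python
import json


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Kubernetes Service manifest of type LoadBalancer.

    All argument values are placeholders chosen for illustration.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # This single field is what asks the platform for a load balancer.
            "type": "LoadBalancer",
            # Route traffic to Pods carrying this label.
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(load_balancer_service("web", "web", 80, 8080), indent=2))
```

On a managed service, applying a manifest like this is typically what triggers the cloud load balancer. On-prem, the same Service stays pending with no external address until something like MetalLB assigns one.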

Another big piece is the scaling. You learned about autoscaling previously, but this is huge. Compute resources are “just ready” for you in the cloud. You don’t have to do much other than pay for it. With on-prem, you must ensure that the hardware is readily available prior to any new load that you weren’t expecting to occur.

The Overall Knowledge Required

In the previous section, under the Complexity piece, there was mention that you’d learn the overall complexity of what’s required to manage on-prem Kubernetes.

Here’s the thing - teaching the knowledge required would literally take 10-15+ books.

Why? Because when you’re running Kubernetes on-prem, it’s not just about running Kubernetes itself.

It’s about understanding how infrastructure works. How virtualization platforms like ESXi work. Having dedicated team members that are:

- Only doing the virtualization pieces.

- Only doing the operating system piece.

- Only doing the storage piece.

- Only doing the networking piece.

Not to mention, outside of the infrastructure layer, the actual Kubernetes layer. Understanding the internals of the Control Plane and the Worker Nodes. Understanding how the Scheduler works. Understanding how to deploy, scale, and secure Etcd. Understanding various CNIs and how/when/why/where to install them.

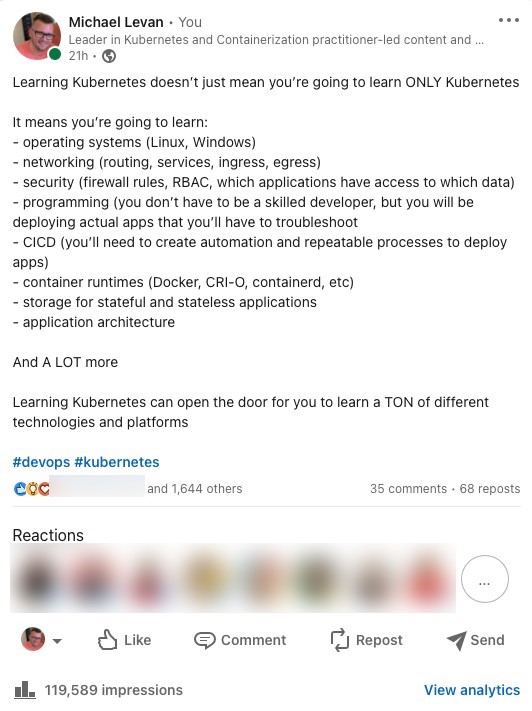

I recently posted something about learning Kubernetes and the other pieces that you need to know. A lot of people tended to agree, with over 1,600 likes, 35 comments, 68 reposts, and over 100,000 impressions.

Why did this small post gain that much traffic? Because learning Kubernetes, especially self-hosted, requires a literal team: 5-10 people at the least who are dedicated only to the Kubernetes environment.

Now, make no mistake, do you still need to know networking, CNIs, storage, and the underlying Kubernetes components in Managed Kubernetes Services? Absolutely you do. You just don’t need to know/understand them at the level of running Kubernetes on-prem.

The way I like to explain it is if you’re running Kubernetes on-prem, you have to understand 100% of Kubernetes and the requirements (infra, networking, storage, etc.) to run it.

If you’re running Managed Kubernetes Services, you have to understand 50%.

An Entire Team

Do you need an entire team to run Kubernetes on-prem? Yes.

Do you need an entire team to run Kubernetes in the cloud? Yes.

As mentioned in the previous section, there’s a lot that’s needed when running Kubernetes both on-prem and in the cloud. The biggest difference is that the team will be different.

From an on-prem perspective, you need:

- System Administrators.

- Network Administrators.

- Storage Administrators.

- Security Administrators.

And that’s just to get the clusters up. Maybe if you’re lucky, you have one person that knows how to do all of it. The question is - do they know how to do it all well? For one cluster, sure, no big deal. How about for five? Or ten? How about scaling across regions? That’s when one person’s knowledge stops being enough and “more teammates” becomes the need.

In the cloud, you need the same type of engineers that understand how to do the Ops piece, but more from a software-defined perspective. They don’t need to know how to rack and stack or run cables.

The biggest piece here, which hasn’t been mentioned yet, is that with Managed Kubernetes Services, the control plane is abstracted away from you. Etcd, Scheduler, and Controller Managers are abstracted away from you. The managed service that you’re using manages that. You just have to worry about the Worker Nodes. Everything that you have to do is essentially Kubernetes API-related. It makes the experience far easier from an Ops perspective and puts more emphasis on a Dev perspective.

💡 Sidenote: Do you need an entire team to run Kubernetes in Portainer whether it’s on-prem or in the cloud? Absolutely not. Let Portainer take care of that. You take care of deploying your apps.

Microk8s - A Middle Ground

Without going into a crazy amount of detail, as there’s much to learn before settling on a middle ground, there is one: Microk8s.

Microk8s markets itself as “Zero-ops Kubernetes”.

The cool thing about Microk8s, other than it being a smaller footprint compared to other Kubernetes installations, is it provides enterprise-ready Addons. For example, out of the box, you can enable Addons for Istio, Cilium, Grafana, and a lot of other Kubernetes centric tools without having to manually install them.

You can install it on Windows, Linux, and macOS. It’s great for Dev clusters to just test out some workloads locally or from a production perspective to scale workloads easier, which ends up saving money because of the smaller footprint that Microk8s provides. You can also get (buy) enterprise support for Microk8s, which gives the relief feeling of having a team that’s there to help.

Security On-Prem vs Managed Kubernetes Service

Kubernetes security is a mess overall.

I’d love to write here that it’s better in the cloud vs on-prem, but the truth is, it’s probably worse in the cloud than on-prem. In the cloud, it’s easy to just “click a button” and poof, your Kubernetes cluster is exposed to the world with improper authentication and authorization.

On-prem, it’s harder to make a Kubernetes cluster insecure because you have to manually configure networks, permissions, and protocols.

However, both on-prem Kubernetes and Managed Kubernetes Services suffer from the same security issues:

- Lack of awareness around Namespaces.

- OPA and other cumbersome security implementations including security policies for Pods.

- RBAC

✅ Sidenote: Yes, you can perform literally every security action mentioned here right from Portainer for both on-prem and managed k8s.

First, there’s the overall understanding of segregation in Kubernetes. The first piece everyone should look at and understand is Namespaces. Namespaces give you the ability to install and deploy containerized workloads in separate areas. Namespaces give you the ability to, for example, have Service Accounts that are tied to a particular namespace to only deploy containerized apps for said namespace. You can also ensure with namespaces that proper RBAC per namespace is configured. However, namespaces don’t do a whole lot else from a security perspective. For example, Pods can still talk to other Pods from a network perspective even outside of their own namespace (this is where Network Policies and/or Service Mesh come into play).
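To show what closing that cross-namespace gap can look like, here is a small Python sketch that builds a NetworkPolicy manifest allowing ingress only from Pods in the same namespace. The policy name and the `team-a` namespace are hypothetical, and the policy only has an effect if the cluster’s CNI enforces Network Policies.

```python
import json


def same_namespace_only_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that only admits ingress from Pods in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # An empty podSelector applies the policy to every Pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches only Pods
            # in the policy's own namespace, so other namespaces are shut out.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(same_namespace_only_policy("team-a"), indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`. Egress is left untouched here; a stricter setup would add rules for that direction as well.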

After namespaces, there’s central policy enforcement and management. Whether you want to implement best practices for Pods (like not using the latest container image tag) or lock down Pods (like not allowing privileged containers to run), you can do all of that with policy management. The problem is, there’s no policy management out of the box. You have to implement a third-party solution like Open Policy Agent (OPA) or Kyverno.
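The two example rules above can be sketched as a plain Python check against a Pod manifest. This is not how OPA or Kyverno are configured (they use Rego and YAML policies respectively); the function name and messages are made up, and the sketch only illustrates the kind of decision a policy engine makes at admission time.

```python
from typing import List


def policy_violations(pod: dict) -> List[str]:
    """Return human-readable violations for two illustrative Pod rules."""
    violations = []
    spec = pod.get("spec", {})
    containers = spec.get("containers", []) + spec.get("initContainers", [])
    for container in containers:
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")
        # Look only at the part after the last "/" so a registry port
        # (e.g. registry:5000/app) is not mistaken for a tag.
        last_segment = image.rsplit("/", 1)[-1]
        if "@" not in last_segment:  # images pinned by digest pass the tag rule
            tag = last_segment.split(":", 1)[1] if ":" in last_segment else ""
            if tag in ("", "latest"):
                violations.append(f"{name}: image '{image}' uses the latest tag or no tag")
        if container.get("securityContext", {}).get("privileged") is True:
            violations.append(f"{name}: privileged containers are not allowed")
    return violations


if __name__ == "__main__":
    pod = {"spec": {"containers": [
        {"name": "web", "image": "nginx:latest", "securityContext": {"privileged": True}},
    ]}}
    for violation in policy_violations(pod):
        print(violation)
```

A real policy engine runs checks like these centrally for every workload, which is the part that is missing out of the box.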

Arguably the absolute largest headache for engineers working with Kubernetes is Role Based Access Control (RBAC). There is a way to manage RBAC in Kubernetes. The problem is after you’re done implementing it, you can’t remember what you did due to banging your head against the desk too many times. Kubernetes with RBAC out of the box allows you to manage authorization (permissions) for users, groups, and service accounts, but the problem is that out of the box, Kubernetes doesn’t give you an authentication solution. That means you have to go to a third-party OIDC solution to properly manage users.

Now, there is a way to manage users without a third-party solution - by creating Linux users and attaching certs to them. I only bring it up because it’s “possible”. However, please, PLEASE don’t put yourself through the pain of doing this. For one user, maybe it seems fine, but how about if you have 50+ users authenticating to Kubernetes? It’s so not worth it.
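For the authorization half, which Kubernetes does provide, the Python sketch below builds a namespaced Role and a RoleBinding and prints them as JSON. The role name, namespace, and the user `jane` are placeholders; where that user identity comes from (OIDC or certificates) is exactly the part RBAC does not cover.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str, role_name: str = "pod-reader") -> dict:
    """Build a Role that can only read Pods inside one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        # "" is the core API group, where Pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """Build a RoleBinding granting `role_name` to a user in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    manifests = [pod_reader_role("team-a"),
                 bind_role_to_user("team-a", "pod-reader", "jane")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Multiply this pair by every user, group, service account, and namespace, and it becomes clear why keeping track of RBAC by hand gets painful.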

Closing Thoughts

After moving through this post, you’re most likely overwhelmed by what’s needed for Kubernetes to actually work properly. That’s okay. The truth is most engineers are. The important piece to remember is that overall, from a production perspective, using a Managed Kubernetes Service/KaaS offering is simply going to make your life easier. The biggest thing that people dislike about this option is vendor lock-in, but let’s face it. Regardless of where you run any platform, there’s always a vendor you’re using and there’s always some type of lock-in.

Try Portainer

If you'd like to give Portainer a try, you can get 3 nodes free here.

