Portainer's Universal Container Management Platform

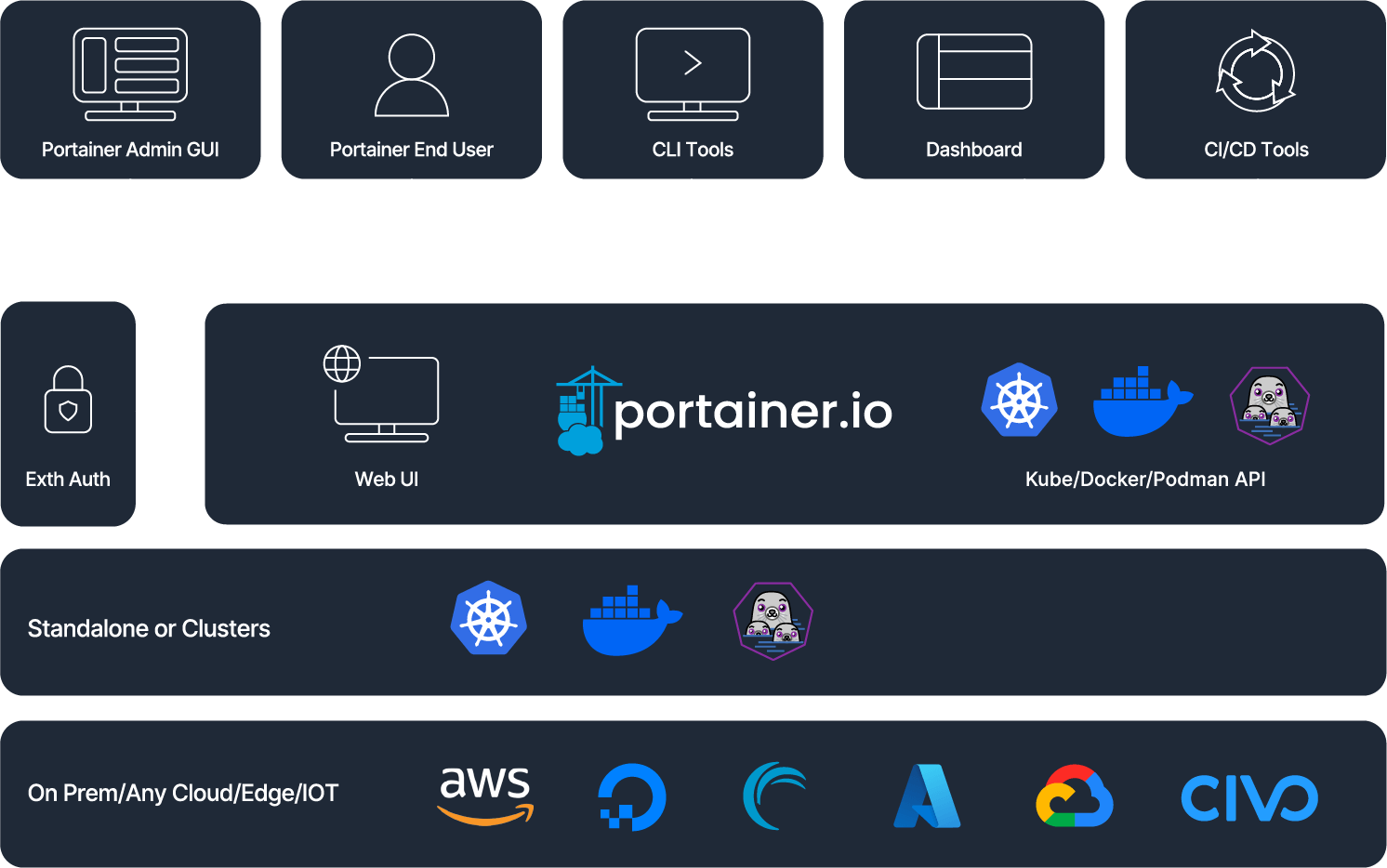

Portainer empowers you to securely manage containerized applications across Docker, Docker Swarm, and Kubernetes, whether they run in the cloud, in a hybrid cloud, on-premises, or in a data center.

Unleash the power of containers with Portainer

In the world of containerization, where complexity often overshadows innovation, Portainer stands as a beacon of simplicity and accessibility. As the universal container management platform, Portainer is more than just a tool—it's a solution that empowers everyone, from individual developers to large enterprises, to harness the full potential of container technology without the burden of technical intricacies.

The Problem: A World Drowning in Complexity

In today’s fast-paced digital landscape, container technology has become the backbone of modern software development.

However, managing containers can be a daunting task.

Steep learning curves, intricate command-line interfaces, and the sheer volume of tools required can overwhelm even seasoned professionals. For many, the promise of containers (agility, scalability, and efficiency) is overshadowed by the complexity of managing them.

The Solution: Portainer

Portainer transforms this complexity into simplicity.

It is the universal container management platform designed to make containerization accessible to all. Whether you’re managing Docker, Podman, or Kubernetes, Portainer provides a unified, intuitive interface that simplifies the process, allowing you to focus on what truly matters: innovation.

The Vision: A World Where Innovation Is Unbound

Portainer envisions a world where the barriers to container adoption are eliminated, where every individual and organization can fully leverage the power of containers without fear or frustration.

With Portainer, the complexity of container management fades into the background, allowing innovation to take center stage.

Why Portainer?

- Simplicity: Portainer’s user-friendly interface is designed with simplicity at its core. It eliminates the need for extensive training, allowing teams to get up and running quickly.

- Universality: Portainer is platform-agnostic, supporting a wide range of container technologies and environments. It provides a single pane of glass to manage your entire container ecosystem, regardless of the underlying infrastructure.

- Empowerment: Portainer democratizes container management. It empowers developers, DevOps teams, and IT operations to take control of their environments without needing to become container experts.

- Community & Support: Built on a foundation of open-source collaboration, Portainer is backed by a passionate community and a robust support network, ensuring that you’re never alone on your container journey.

Portainer is a universal container management platform that doesn't lock you into a single technology or vendor

Simplify the deployment, troubleshooting, and security of containerized applications across your environments

Hear from our Customers

“Application teams that had zero knowledge of Kubernetes now work completely autonomous and release their applications without worries throughout the day.”

"Portainer has allowed us to get our apps up and running in our Kubernetes environment quickly and easily. Our deployment times have dropped significantly and we’re seeing less production errors. It’s a great product."

“Our goal was to maintain our Docker infrastructure while adding the ability for RBAC for developers to view logs in multiple environments while not having advanced privileges. Portainer just works.”

Join the Revolution

Portainer is more than just a product; it’s a movement towards simplicity, accessibility, and empowerment in the containerization space. Join us in revolutionizing the way the world manages containers, because with Portainer the future of container management is not just for the experts; it’s for everyone.