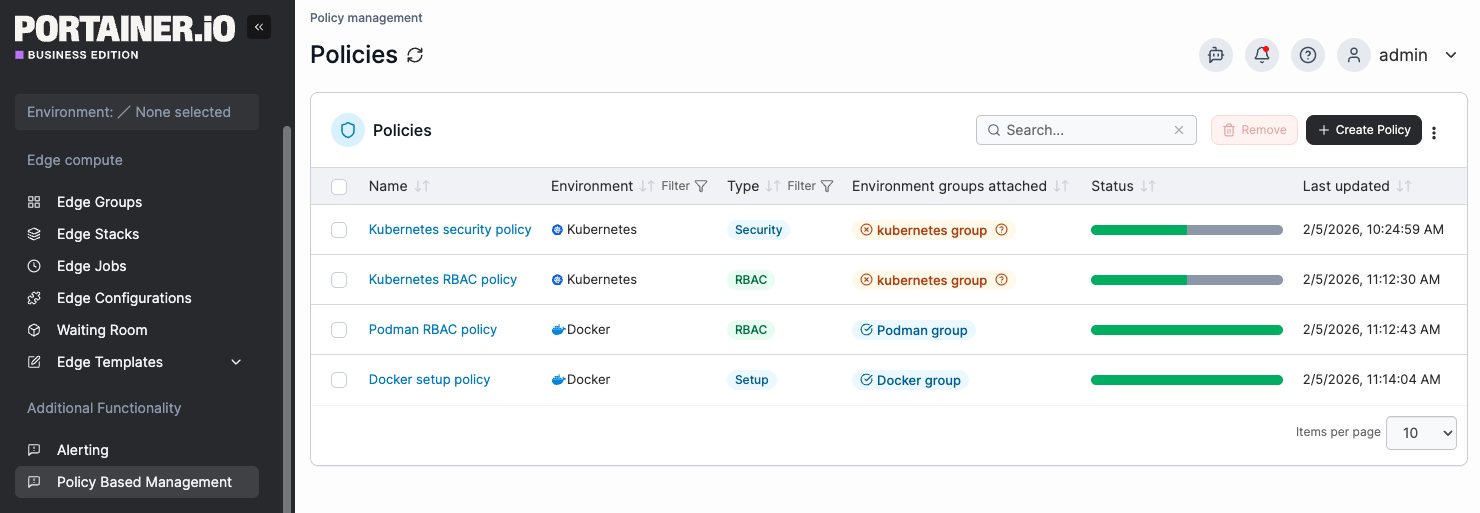

With the release of version 2.38 STS, Portainer has launched a new capability that we are calling Fleet Governance Policies. With this new feature, Portainer helps you configure and secure your container clusters in a standardized manner across regions, cloud providers, and diverse development environments.

Context

First, containers standardized how we package and distribute applications. Then container orchestrators, such as Kubernetes, standardized how we launch replica sets of containers across diverse pools of compute capacity. But now IT organizations must tackle Kubernetes sprawl. Most IT organizations are now running tens or even hundreds of Kubernetes control planes. There are a few reasons why:

- It is not safe to have workloads from different business divisions sharing the same Kubernetes cluster. While Kubernetes does come with some basic mechanisms that allow for shared usage patterns, these were never designed as hard security boundaries. In fact, a recent RBAC bypass issue that allows for executing commands in any pod in the cluster was closed as "Working as Intended".

- Shared clusters inevitably lead to capacity conflicts or performance issues that motivate teams to split their workloads up across multiple clusters.

- In the cloud, running a single large cluster tends to overwhelm cloud provider limits and quotas. Cloud providers resort to telling customers to split their largest workloads across multiple child accounts that operate in different infrastructure partitions. Each of these partitions then requires its own Kubernetes cluster.

- Even if your software and engineering org structure are simple enough to effectively share a single Kubernetes cluster, you will often end up running multiple Kubernetes clusters for geographical scaling purposes. Kubernetes is not designed for high latency between control plane and nodes, so if you have multiple cloud regions, or multiple geographically distributed data centers, then they should each have their own Kubernetes control plane.

- Last but not least, development and QA workloads should usually not be placed alongside production workloads. The code you are actively working on is far more likely to have serious performance issues or bugs that could impact the reliability of production. Developers should also be given their own isolated environments to test proposed code on.

Because of these factors, it's not uncommon for the largest IT companies to end up with thousands of clusters. How do they manage all these clusters?

Governing Kubernetes sprawl

There are two stages to governing Kubernetes sprawl. The first stage is typically accomplished by creating an official infrastructure as code template, then mandating that engineering teams deploy their Kubernetes clusters using this pre-vetted template. This approach scales fairly well at first, especially if you have an internal developer portal that allows teams to easily provision the template with minimum effort. But there are still two major issues:

- Updates and improvements to policy - Even with a single shared Kubernetes template that you use as the starting point for all clusters, what happens when you want to update that template? Perhaps a new version of Kubernetes comes with new APIs that must be considered in your RBAC policy. Or a potential security issue is discovered that must be prevented using a new Gatekeeper rule. Even if you update your base template for Kubernetes, you are still reliant on each team to individually learn about this update, then execute the update on their clusters to ensure the clusters match the latest version of the template. In large IT orgs you will always have some percentage of clusters that are running behind on updates, unprotected by your latest security policies.

- Drift from intended policy - Giving a large, decentralized org access to a shared template for Kubernetes does not guarantee that they will use that template properly. For example, let's say your Kubernetes template comes with OPA Gatekeeper preinstalled, and several Gatekeeper policies that are designed to limit Kubernetes usage to a known safe security posture. However, some of your teams are unhappy with the intended security posture and decide to modify their local OPA Gatekeeper policies to no longer perform required security checks. This results in drift that potentially endangers your workloads.

Because of these issues, enterprise Kubernetes adopters realize they need to move on to stage two of governance. That's where Portainer Fleet Governance comes into the picture.

Portainer uses a hub and spoke model in which each Kubernetes cluster runs a lightweight Portainer agent that connects back to the central Portainer server. The Portainer server collects info about all your Kubernetes environments, but it also acts as a command and control center. Portainer Fleet Governance is built on top of this powerful capability. As a Portainer admin you can use the Portainer server interface to install policies on any environment that is running an agent connected to your Portainer server. In the case of our "Security Constraints" policy, attaching this policy to an environment automatically installs OPA Gatekeeper, as well as Gatekeeper rules to implement security constraints that you control in the Portainer interface.

When you update a policy, the changes automatically propagate to all connected environments that have that policy attached. This means that if you need to upgrade OPA Gatekeeper, or change a Gatekeeper rule, you can make a change in one place - in your Portainer Server UI - and your entire fleet receives the update.
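To make the propagation model concrete, here is a minimal sketch of the hub side of a hub and spoke design. It is an illustration only, not Portainer's implementation or API: the `Hub`, `Policy`, and `Environment` classes, the version counter, and the environment names are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    name: str
    version: int = 1
    settings: dict = field(default_factory=dict)


@dataclass
class Environment:
    name: str
    attached: set = field(default_factory=set)   # policy names attached by the admin
    applied: dict = field(default_factory=dict)  # policy name -> version last applied


class Hub:
    """Hypothetical central server that tracks policies and attachments."""

    def __init__(self):
        self.policies = {}
        self.environments = {}

    def add_environment(self, name):
        self.environments[name] = Environment(name)

    def attach(self, env_name, policy):
        self.policies[policy.name] = policy
        self.environments[env_name].attached.add(policy.name)

    def update_policy(self, name, **settings):
        # One edit in one place: change the settings and bump the version.
        policy = self.policies[name]
        policy.settings.update(settings)
        policy.version += 1

    def pending(self):
        """Return (environment, policy) pairs whose applied version is stale."""
        stale = []
        for env in self.environments.values():
            for policy_name in sorted(env.attached):
                if env.applied.get(policy_name) != self.policies[policy_name].version:
                    stale.append((env.name, policy_name))
        return stale

    def sync(self):
        """Simulate every agent picking up the latest attached policy versions."""
        for env_name, policy_name in self.pending():
            env = self.environments[env_name]
            env.applied[policy_name] = self.policies[policy_name].version


hub = Hub()
for env_name in ("prod-eu", "prod-us", "dev"):
    hub.add_environment(env_name)
security = Policy("security-constraints")
hub.attach("prod-eu", security)
hub.attach("prod-us", security)
hub.sync()
hub.update_policy("security-constraints", enforcement="deny")
print(hub.pending())  # both production environments are now due for the update
```

The point of the sketch is that the admin edits the policy once, and the set of environments that need the change is derived from the attachments rather than tracked by hand. The `dev` environment never appears in the pending list because the policy was never attached to it.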

Additionally, environments that are running the Portainer agent use a polling process to check back with the Portainer server and remediate drift from the intended state. So even if an important security policy is manually removed from the environment, the agent will detect this and reinstall the policy automatically.
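The drift remediation described above follows the general shape of a reconciliation loop: compare the intended state with what is actually installed, and repair any difference. The sketch below shows that pattern under stated assumptions. It is not the Portainer agent's code; the function names, the dictionary representation of policies, and the rule names (`require-non-root`, `block-privileged`) are hypothetical.

```python
import time


def reconcile(desired: dict, actual: dict) -> list:
    """Bring `actual` in line with `desired` and return the actions taken.

    Both arguments map a policy name to its settings. Only policies named
    in `desired` are touched; anything else in `actual` is left alone.
    """
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("install", name))   # policy was removed or never installed
        elif actual[name] != spec:
            actions.append(("restore", name))   # policy was modified locally
        else:
            continue                            # already matches intended state
        actual[name] = dict(spec)               # copy so later edits do not alias
    return actions


def run_agent(fetch_desired, actual, cycles, interval_seconds=0.0):
    """Poll for the intended state `cycles` times, reconciling on each pass."""
    log = []
    for _ in range(cycles):
        log.append(reconcile(fetch_desired(), actual))
        time.sleep(interval_seconds)
    return log


desired = {
    "require-non-root": {"enforcement": "deny"},
    "block-privileged": {"enforcement": "deny"},
}
# A team has weakened one rule and the other is missing entirely.
actual = {"require-non-root": {"enforcement": "warn"}}
print(reconcile(desired, actual))
print(reconcile(desired, actual))  # a second pass finds nothing left to fix
```

Because each pass is idempotent, running it on a timer is safe: a pass that finds no drift takes no action, and a pass that finds a removed or altered policy puts it back.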

Portainer’s Fleet Governance is a solution to the problem of Kubernetes sprawl. It gives administrators a way to install consistent settings and policies across an entire fleet, update these settings and policies retroactively, and automatically remediate drift from the intended setting and policy state.

Comparison to existing solutions

There are some existing approaches in the Kubernetes ecosystem that attempt to address parts of this problem space, but most of them fall short once you zoom out to fleet scale.

- Cluster-local policy engines - Tools like OPA Gatekeeper or Kyverno are excellent at enforcing policy inside a single standalone Kubernetes cluster. But they are not fleet management tools. They do not help you decide which clusters should have which policies, nor do they provide a central place to roll out changes, audit coverage, or remediate drift across hundreds or thousands of clusters. You still need a separate system to manage lifecycle, versioning, and consistency at scale.

- GitOps-only approaches - GitOps works well for declarative workloads and cluster add-ons, but it assumes a level of process maturity that many organizations do not yet have. It also assumes that every cluster is wired correctly to the right repositories, credentials, and pipelines. When clusters drift, credentials expire, or teams accidentally break the pipeline, GitOps alone has no enforcement mechanism. Portainer Fleet Governance does not replace GitOps, but it does complement it by providing an authoritative control plane that can enforce baseline policy even when Git workflows break down.

- Cloud-provider-specific governance - Managed Kubernetes offerings typically provide policy and security tooling, but only within their own ecosystem, and even then often only partially. Cloud-provider-specific solutions do not extend cleanly across multiple cloud accounts, different cloud providers, on-premises clusters, edge environments, or developer laptops. As soon as an organization becomes hybrid or multi-cloud, these tools fragment into separate silos. Portainer Fleet Governance, on the other hand, is infrastructure-agnostic and applies the same controls everywhere an agent can run.

- Custom internal platforms - Some large organizations build their own governance layers on top of Kubernetes. These efforts are expensive, slow to evolve, and difficult to maintain as Kubernetes itself changes. Policy logic often becomes tightly coupled to internal assumptions, making it hard to adapt to new environments, teams, or regulatory requirements. Portainer Fleet Governance provides a maintained, productized control plane without locking customers into a single infrastructure model.

Portainer Fleet Governance is designed for the reality of modern Kubernetes sprawl. It centralizes intent, distributes enforcement, and continuously reconciles reality back to policy. For organizations that operate Kubernetes at scale, across teams and environments, it closes a gap that existing tools leave open.

Where Portainer is going next

Portainer Fleet Governance is the foundation for the roadmap we have planned in this space. There are more solutions that we want to provide for fleet operators:

- Additional policy types - You should be able to try out OPA Gatekeeper, Kyverno, or other policy enforcement engines, side by side if you wish. Portainer aims to walk a careful line between being opinionated (offering you default policies that just work out of the box), and flexible (allowing you to pick and choose exactly how you want governance to work). If there is a policy type that you want us to add, please let us know!

- Workload auditing and alerting - It's one thing to install a policy onto a Kubernetes cluster, but what are the side effects of the policy? How many environments are already running workloads that breach this policy? What should happen to these workloads? Should they stay running or should they be stopped? Portainer will audit workloads to give you a realistic preview of what will happen when you apply a policy. It will also alert you if a workload breaches policy.

- GitOps integration - Portainer currently takes direct control of your remote environments via the Portainer agent. We plan to introduce an intermediate review step, with optional GitOps integration. You will be able to define policies using the Portainer UI, but then review the policy as code manifests and approve their merge into an administrative git repo. The git repo will then be used by the Portainer agent as the basis for declarative GitOps reconciliation across all your environments.
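The workload auditing item above can be pictured as a dry run: evaluate a proposed policy against the workloads that are already running and report what would breach it, without changing anything. The following is a sketch of that idea only. This feature is on the roadmap, so the `Workload` fields, the rule names, and the `audit` function are all assumptions for illustration and do not describe a Portainer interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    environment: str
    name: str
    privileged: bool
    runs_as_root: bool


def audit(workloads, rules):
    """Return {rule name: [breaching workloads]} without modifying anything.

    `rules` maps a rule name to a function that returns True when the
    given workload would violate that rule.
    """
    report = {rule_name: [] for rule_name in rules}
    for workload in workloads:
        for rule_name, is_violation in rules.items():
            if is_violation(workload):
                report[rule_name].append(f"{workload.environment}/{workload.name}")
    return report


# Hypothetical rules and fleet inventory.
rules = {
    "block-privileged": lambda w: w.privileged,
    "require-non-root": lambda w: w.runs_as_root,
}
fleet = [
    Workload("prod-eu", "checkout", privileged=False, runs_as_root=False),
    Workload("prod-us", "log-shipper", privileged=True, runs_as_root=True),
    Workload("dev", "scratch", privileged=False, runs_as_root=True),
]
for rule_name, offenders in audit(fleet, rules).items():
    print(rule_name, offenders)
```

A report like this answers the "how many environments already breach this policy" question before enforcement is switched on, which leaves the decision about what to do with existing workloads to the administrator.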

At Portainer, we strongly believe that container orchestration should be stress-free. Although some sprawl and complexity are inevitable, there is no reason this needs to result in chaos. If you have the right tool in your hands, you will be empowered to solve your problems with finesse. We believe that Portainer is that tool. We are proud to offer you Fleet Governance Policies, and we are excited to hear your feedback and suggestions on what we can do to better support your container orchestration needs.